How do I detect the orientation (rotation) of a hand in a video?

Community highlights

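Volcengine Video Cloud Product Monthly, September 2023

...makes it easy to launch your own VR video apps on Pico and other VR Pro devices. - "Virtual live-streaming studio solution": digital-human hosting and AR scene effects deliver an immersive viewing experience and professional-grade studio production. **Intelligent driving scenarios** - Built on Volcengine Video Cloud's real-time audio/video and AI technology, the "remote vehicle control solution" enables remote monitoring, parallel driving, and in-vehicle entertainment. **Financial review scenarios** - The "financial live-streaming compliance solution" is a compliance review system that Volcengine built on a large AI detection model; it can help financial customers effectively filter out more than 90% of content ...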

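2022 Year-End Review: A Two-Year Androider's Path of Technical Growth | Community Essay Contest

Through recording and summarizing, my thinking and understanding also changed gradually... Here I roughly sort out the different directions; jym who want to improve themselves can look for material in these areas: >- Knowledge management methods >- Efficient time management, GTD time management, life-hacker time management >- ... That wraps up the summary of general skills for now. In November I had already switched my focus to the technical side, so next let's look at what I learned and how I grew technically. ## On technical growth Sure enough, the technical courses at a big company are rich. I used to like searching online for videos and blog material ...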

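Applied Practice of Intelligent Audio Signal Processing at ByteDance

For example, advances in adaptive filter theory greatly accelerated the adoption of echo cancellation across business scenarios, while array signal processing ensures the effectiveness of sound source localization and beamforming in consumer electronics and audio/video creation. Advances in deep learning and psychoacoustics have also greatly accelerated multimodal audio/video signal... Beyond video scenarios, we have also developed an end-to-end, high-quality, low-latency VoIP technology. It brings some innovations over traditional techniques, especially in system stability and voice beautification: * In terms of **system stability**: based on overall hardware state detection, ...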

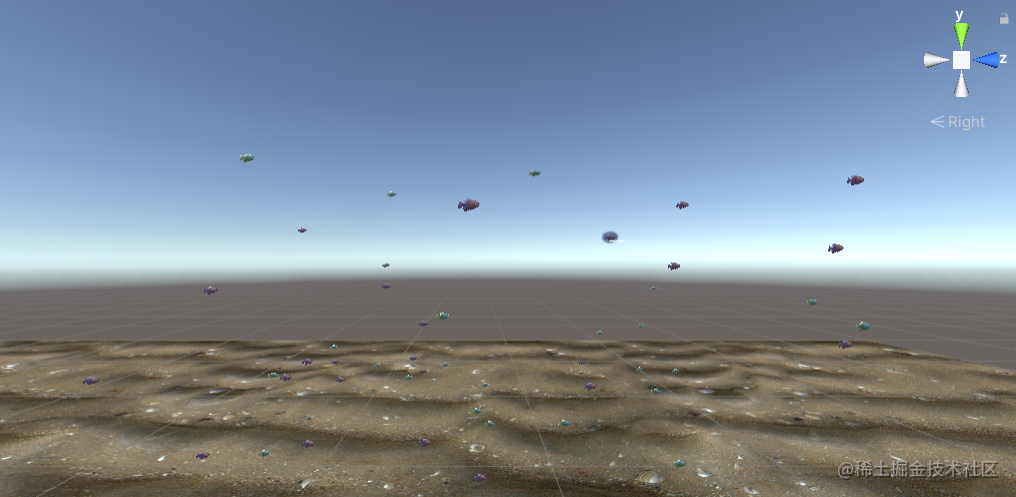

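[Flocking Algorithm] How a "Sea King's" Fish Pond Is Built | Community Essay Contest

Add speed and direction in the FlockSpeed script.

```c#
private void Update()
{
    speed = Random.Range(sp.min, sp.max); // speed range
    this.transform.Translate(0, 0, speed * Time.deltaTime); // start moving
}
```

At this point the school of fish only moves along the Z axis and can do nothing else. ...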

Special offers

Related content

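Feature release history

Best-practice document for progressive image loading: implementing progressive image loading with the mobile SDK. November 2023. Columns: change, description, release date, related documentation. Data migration: migration task page optimization, 2023-11-30, Data migration. Image processing template: New: the original frame-capture configuration is split into animated-image frame capture and video... rotated to the correct orientation for display. Retain EXIF information: supports retaining all or part of the processed EXIF information for images in the specified output formats. 2023-09-08, Image processing configuration. Custom processing style: New: supports using different versions of image processing by configuring historical-version image processing parameters...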

Basic features

VePlayer, via HTML5's ...

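Video frame orientation

Applicable scenarios: Video frames captured on mobile devices are wider than they are tall by default and carry a rotation angle. Depending on the device orientation, the rotation angle may be 0, 90, 180, or 270 degrees. In single-stream relay scenarios, the player cannot process the angle information during decoding, so the rendered video frames are not rotated upright beforehand. ... Before custom video processing and encoding, set the rotation angle in the video frame to 0 and pass the adjusted frame through the entire RTC pipeline. In single-stream relay scenarios, it is recommended to fix the video frame orientation to Portrait or Landscape mode as the business requires. When video effect stickers are enabled on mobile, or...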
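
The idea of setting the rotation angle to 0 before processing can be illustrated with a minimal Python sketch. It is not the RTC SDK's implementation: the function name `bake_rotation`, the frame representation (a list of pixel rows), and the assumption that the angle means "rotate clockwise by this much to display upright" are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

Frame = List[List[int]]  # hypothetical frame type: rows of pixel values


def rotate_cw_90(frame: Frame) -> Frame:
    """Rotate a frame 90 degrees clockwise (reverse the rows, then transpose)."""
    return [list(row) for row in zip(*frame[::-1])]


def bake_rotation(frame: Frame, rotation_deg: int) -> Tuple[Frame, int]:
    """Apply the rotation angle to the pixels and return (frame, 0).

    Assumption: rotation_deg is the clockwise rotation needed to display
    the frame upright, and is one of 0, 90, 180, 270.
    """
    if rotation_deg not in (0, 90, 180, 270):
        raise ValueError(f"unsupported rotation angle: {rotation_deg}")
    upright = [list(row) for row in frame]  # copy so the input is untouched
    for _ in range(rotation_deg // 90):
        upright = rotate_cw_90(upright)
    # The angle is now baked into the pixel data, so the new angle is 0.
    return upright, 0


if __name__ == "__main__":
    landscape = [[1, 2, 3],
                 [4, 5, 6]]  # 3 wide, 2 tall
    print(bake_rotation(landscape, 90))
```

Once the pixels themselves are upright, a player that ignores the angle still renders the frame correctly, which is the point of normalizing before the relay step.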

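SDK overview

Must be called before the start interface. Note in particular that calling the vePhoneEngine prepare function marks the formal start of SDK use. Because the SDK needs to collect necessary user information while running, you must notify the user and obtain authorization before calling the vePhoneEngine prepare function. For details, see SDK prepare function. When requesting the cloud phone service, you can now specify the video rotation mode through the videoRotationMode parameter, which lets the SDK handle the orientation of the video picture internally. For details, see Start playback. Added "set/get video rotation mo...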

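Features

Algorithms can identify regions of interest to the human eye in an image, including text, and encode them with different parameters to achieve better bitrate allocation. ALPHA encoding: recommended for scenarios that include transparent images, so that the image can have a transparent background or transparent parts and can, with other images, ... Adaptive rotation: when the source image is in jpeg, webp, png, avif, tiff, or heic format, it is first automatically rotated to the correct display orientation according to the EXIF rotation information in the source image, and then processed. Retain EXIF information: during image processing or editing, keeps the EXIF... of the original image file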
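
The adaptive rotation described above can be sketched in Python as follows. This is an illustrative sketch, not the service's implementation: it uses the standard EXIF Orientation values (1 to 8), while the function name `apply_exif_orientation` and the list-of-rows image representation are assumptions made for the example.

```python
from typing import List

Pixels = List[List[int]]  # hypothetical image type: rows of pixel values


def rotate_cw_90(px: Pixels) -> Pixels:
    return [list(row) for row in zip(*px[::-1])]


def flip_horizontal(px: Pixels) -> Pixels:
    return [row[::-1] for row in px]


def flip_vertical(px: Pixels) -> Pixels:
    return [list(row) for row in px[::-1]]


def transpose(px: Pixels) -> Pixels:
    return [list(row) for row in zip(*px)]


def apply_exif_orientation(px: Pixels, orientation: int) -> Pixels:
    """Return the pixels as they should be displayed for an EXIF Orientation value."""
    if orientation == 2:
        return flip_horizontal(px)
    if orientation == 3:  # rotate 180 degrees
        return flip_horizontal(flip_vertical(px))
    if orientation == 4:
        return flip_vertical(px)
    if orientation == 5:
        return transpose(px)
    if orientation == 6:  # rotate 90 degrees clockwise
        return rotate_cw_90(px)
    if orientation == 7:  # transverse: transpose, then rotate 180 degrees
        return flip_horizontal(flip_vertical(transpose(px)))
    if orientation == 8:  # rotate 270 degrees clockwise
        return rotate_cw_90(rotate_cw_90(rotate_cw_90(px)))
    # 1 (already upright) or an unknown value: return an unchanged copy.
    return [list(row) for row in px]


if __name__ == "__main__":
    print(apply_exif_orientation([[1, 2], [3, 4]], 6))
```

With real image files, Pillow's `ImageOps.exif_transpose` performs the same correction on an `Image` object; the pure-Python version here only shows the mapping from orientation value to transform.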

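Image processing configuration

Billing overview: Different items of the image processing configuration fall under different billing items. Output in HEIC, HEIF, AVIF, AVIS, and VVIC formats is billed as the efficient image compression service; frame capture and short-video-to-animated-image conversion are value-added billing items; all other configurations are basic image processing billing items. Specifically... Global optimum: checks frame by frame starting from the first frame of the animated image and returns the frame with the highest brightness. Timeout: the processing timeout; if processing does not finish within the specified time, a failure is returned. The value range is [100, 10000] in ms, and the default is 1500. Video frame capture: Smart mode: starting from the first frame of the video...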
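
The "global optimum" mode and its timeout can be sketched roughly as below. This is a hedged illustration only: the source does not say how brightness is measured, so the mean pixel value is an assumption, and the names `mean_brightness` and `brightest_frame_index` are hypothetical.

```python
import time
from typing import List, Sequence

Frame = Sequence[Sequence[int]]  # hypothetical frame type: rows of luma values


def mean_brightness(frame: Frame) -> float:
    """Average pixel value of a frame (assumed brightness measure)."""
    total = sum(sum(row) for row in frame)
    count = sum(len(row) for row in frame)
    return total / count if count else 0.0


def brightest_frame_index(frames: List[Frame], timeout_ms: int = 1500) -> int:
    """Scan frames from the first one and return the index of the brightest.

    timeout_ms must lie in [100, 10000]; a TimeoutError stands in for the
    "return failure" behavior when the scan does not finish in time.
    """
    if not 100 <= timeout_ms <= 10000:
        raise ValueError("timeout_ms must be within [100, 10000]")
    if not frames:
        raise ValueError("no frames to inspect")
    deadline = time.monotonic() + timeout_ms / 1000.0
    best_index, best_value = -1, float("-inf")
    for index, frame in enumerate(frames):
        if time.monotonic() > deadline:
            raise TimeoutError("frame scan exceeded the timeout")
        value = mean_brightness(frame)
        if value > best_value:  # strict comparison keeps the earliest frame on ties
            best_index, best_value = index, value
    return best_index


if __name__ == "__main__":
    frames = [[[10, 10]], [[200, 100]], [[50, 50]]]
    print(brightest_frame_index(frames))
```

Scanning every frame is what makes the result "global"; a cheaper mode could stop at the first frame that passes a brightness threshold, at the cost of possibly missing a brighter later frame.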

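Overview

This interface specifies video capture parameters, including mode, resolution, and frame rate. setVideoRotationMode: sets the rotation mode of captured video. By default, the App orientation is used as the rotation reference frame. When rendering video, the receiver rotates it in the same way as the sender. setLocalVideoCanv... isCameraExposurePositionSupported: checks whether the camera in use supports manually setting the exposure point. setCameraExposurePosition: sets the exposure point of the camera in use. setCameraExposureCompensation: sets the exposure compensation of the camera in use. ...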

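Client SDK

Added the streamType parameter to specify the type of audio/video stream to pull, supporting scenarios where the game starts muted by default. For details, see Start playback. When requesting the game service, added the optional debugConfig parameter for passing a JSON string to configure SDK properties (for example: overseas domain configuration... Fixed a known issue where the cloud game rotation logic caused incomplete display on certain device models. iOS: The iOS SDK includes the following new features and changes: When requesting the game service, added the enable_archive_upload parameter in the extra parameter list to set whether, after the game ends, to upload the user's ...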