This section walks you through using a secure cluster from scratch and running MapReduce, Spark, Hive, and other examples.

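Limitations

- Kerberos can currently be enabled only when a cluster is created. Converting an ordinary cluster to a Kerberos cluster is not supported.

- The user management module is integrated with the Kerby component: a user added in user management is also registered as a principal in Kerby, and you can download that user's keytab file.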

创建安全集群

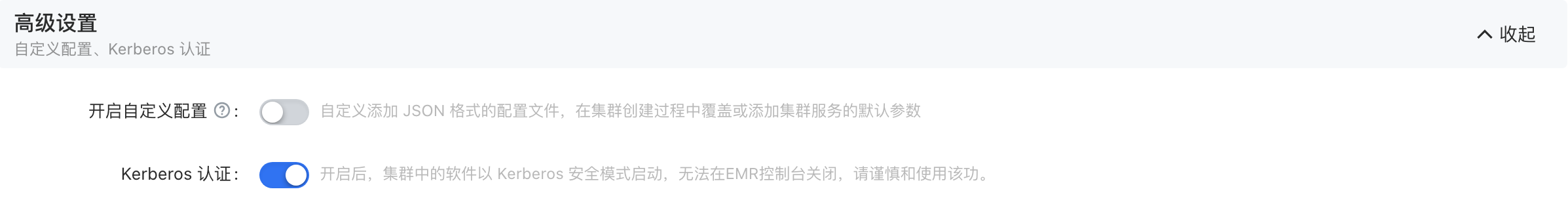

火山引擎 LAS 集群通过集成 Apache Kerby 服务为集群提供 Kerberos 能力,如果在创建集群时启用了 Kerberos 认证,则集群在创建时会集成安装 Kerby 组件,并提供基础的 Kerberos 使用环境。

其余集群操作详见创建集群。

Create a user

See the User management section to create an authenticated user and download the corresponding keytab file.

Run a MapReduce example

See the User management section to create the user01 user and download its keytab file, then upload the file to the /etc/krb5/keytab/user directory on the ECS instance.

Generate a ticket for the user.

    source /etc/emr/kerberos/kerberos-env.sh
    kinit -k -t /etc/krb5/keytab/user/user01.keytab user01

Run the MapReduce WordCount example.

    source /etc/emr/hadoop/hadoop-env.sh
    hadoop fs -mkdir /tmp/input
    hadoop fs -put /usr/lib/emr/current/hadoop/README.txt /tmp/input/data
    yarn jar /usr/lib/emr/current/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.4-ve-1.jar wordcount /tmp/input/data /tmp/output

Note: The Hadoop version number in hadoop-mapreduce-examples-3.3.4-ve-1.jar differs between EMR versions. Adjust the path to match your environment.
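Because the version number in the jar name varies, one option is to look the jar up by pattern instead of typing the version by hand. The following is a minimal sketch, not part of LAS or EMR: find_examples_jar is a hypothetical helper name, and it assumes that only one matching jar exists in the directory.

```shell
# Hypothetical helper (an assumption, not shipped with the cluster):
# print the first regular file in a directory whose name matches a glob pattern.
find_examples_jar() {
    dir=$1
    pattern=$2
    # $pattern is intentionally unquoted so that the shell expands the glob.
    for jar in "$dir"/$pattern; do
        # If nothing matches, the glob stays unexpanded, so check for a real file.
        if [ -f "$jar" ]; then
            printf '%s\n' "$jar"
            return 0
        fi
    done
    echo "no jar matching $pattern under $dir" >&2
    return 1
}

# Possible usage on a cluster node (directory taken from the example above):
#   jar=$(find_examples_jar /usr/lib/emr/current/hadoop/share/hadoop/mapreduce 'hadoop-mapreduce-examples-*.jar')
#   yarn jar "$jar" wordcount /tmp/input/data /tmp/output
```

The same helper can be pointed at the Spark examples directory with a pattern such as 'spark-examples_*.jar'.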

Run a Spark example

Generate a ticket for the user.

    source /etc/emr/kerberos/kerberos-env.sh
    kinit -k -t /etc/krb5/keytab/user/user01.keytab user01

Alternatively, pass the user's authentication information on the command line. For example:

    spark-submit --conf spark.kerberos.principal=user01 --conf spark.kerberos.keytab=/etc/krb5/keytab/user/user01.keytab ....

Run the Spark SparkPi example.

    spark-submit --master yarn --class org.apache.spark.examples.SparkPi --conf spark.kerberos.principal=user01 --conf spark.kerberos.keytab=/etc/krb5/keytab/user/user01.keytab /usr/lib/emr/current/spark/examples/jars/spark-examples_2.12-3.2.1.jar

Note: The Spark version number in spark-examples_2.12-3.2.1.jar differs between LAS versions. Fill it in according to your environment.

Run a Spark SQL example.

Enter spark-sql to open the Spark SQL console.

    spark-sql --conf spark.kerberos.principal=user01 --conf spark.kerberos.keytab=/etc/krb5/keytab/user/user01.keytab

Enter the following SQL statements.

    CREATE TABLE IF NOT EXISTS t1(i INT, s STRING);
    INSERT INTO t1 VALUES(0, 'a');
    SELECT * FROM t1;
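The two Kerberos options are repeated in every spark-submit and spark-sql call above, so they can be generated once. The sketch below assumes a hypothetical helper name, spark_kerberos_conf, and assumes that neither the principal nor the keytab path contains spaces.

```shell
# Hypothetical helper (an assumption): print the two --conf options used above
# for a given principal and keytab path.
spark_kerberos_conf() {
    principal=$1
    keytab=$2
    printf '%s %s %s %s\n' \
        --conf "spark.kerberos.principal=$principal" \
        --conf "spark.kerberos.keytab=$keytab"
}

# Possible usage (the command substitution is left unquoted on purpose so the
# output splits into four separate arguments):
#   spark-sql $(spark_kerberos_conf user01 /etc/krb5/keytab/user/user01.keytab)
```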

Run a Hive example

Generate a ticket for the user.

    kinit -k -t /etc/krb5/keytab/user/user01.keytab user01

Run the Hive CLI.

- Enter the hive command to open the Hive console.

- Enter the following HQL statements.

    CREATE TABLE IF NOT EXISTS t2(i INT, s STRING);
    INSERT INTO t2 VALUES(0, 'a');
    SELECT * FROM t2;

Connect to HiveServer2.

    beeline -u "jdbc:hive2://${zookeeper_address}/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2;principal=${hiveserver2_principal}"

For example:

    beeline -u "jdbc:hive2://10.0.255.9:2181,10.0.255.7:2181,10.0.255.8:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2;principal=hive/_HOST@2FDE5608A274D0A2320C.EMR.COM"

Note: In the example, 2FDE5608A274D0A2320C.EMR.COM is the domain name of the KDC. Fill it in according to your environment.

Run the following HQL statements.

    CREATE TABLE IF NOT EXISTS t3(i INT, s STRING);
    INSERT INTO t3 VALUES(0, 'a');
    SELECT * FROM t3;
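The beeline connection string above has three variable parts: the ZooKeeper addresses, the ZooKeeper namespace, and the service principal. As a rough sketch, a small function can assemble it. The name hs2_jdbc_url is hypothetical and the function only formats a string; it does not check that the values are valid for your cluster.

```shell
# Hypothetical helper (an assumption): build the ZooKeeper service-discovery
# JDBC URL in the same shape as the beeline example above.
hs2_jdbc_url() {
    zk_quorum=$1   # comma-separated host:port list
    namespace=$2   # for example hiveserver2
    principal=$3   # service principal, for example hive/_HOST@REALM
    printf 'jdbc:hive2://%s/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=%s;principal=%s\n' \
        "$zk_quorum" "$namespace" "$principal"
}

# Possible usage:
#   beeline -u "$(hs2_jdbc_url 10.0.255.9:2181,10.0.255.7:2181,10.0.255.8:2181 hiveserver2 hive/_HOST@2FDE5608A274D0A2320C.EMR.COM)"
```

Passing kyuubi as the namespace yields the URL form used in the Kyuubi example later in this section.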

Run a Flink example

Generate a ticket for the user.

    kinit -k -t /etc/krb5/keytab/user/user01.keytab user01

Use the flink run command to run the WordCount example that ships with Flink.

    hadoop fs -put /usr/lib/emr/current/hadoop/README.txt /tmp/flink_data.txt
    flink run -m yarn-cluster /usr/lib/emr/current/flink/examples/streaming/WordCount.jar --input hdfs://emr-cluster/tmp/flink_data.txt --output hdfs://emr-cluster/tmp/flinktest_out

Note: Adjust the HDFS paths to match your environment.

View the WordCount result.

    hadoop fs -cat /tmp/flinktest_out/*/*

Run an HBase example

Generate a ticket for the user.

    kinit -k -t /etc/krb5/keytab/user/user01.keytab user01

Run the HBase shell.

- Enter the hbase shell command to open the console.

- Enter the following statements.

    create 't1', 'f1', SPLITS => ['10', '20', '30', '40']
    put 't1', 'r1', 'f1:c1', 'value'
    scan 't1'

Run a Presto/Trino example

Note: Presto and Trino are used in the same way, so the following uses Presto as the example.

Run the Presto command:

    presto \
      --krb5-principal=${user_principal} \
      --krb5-keytab-path=${keytab_file_of_principal}

For example:

    presto --krb5-principal=user01 --krb5-keytab-path=/etc/krb5/keytab/user/user01.keytab

Enter a Presto SQL statement, for example select * from tpch.sf1.nation:

    presto> select * from tpch.sf1.nation;
     nationkey |      name      | regionkey | comment
    -----------+----------------+-----------+----------------------------------------------------------------------------------------------------------------
             0 | ALGERIA        |         0 |  haggle. carefully final deposits detect slyly agai
             1 | ARGENTINA      |         1 | al foxes promise slyly according to the regular accounts. bold requests alon
             2 | BRAZIL         |         1 | y alongside of the pending deposits. carefully special packages are about the ironic forges. slyly special
             3 | CANADA         |         1 | eas hang ironic, silent packages. slyly regular packages are furiously over the tithes. fluffily bold
             4 | EGYPT          |         4 | y above the carefully unusual theodolites. final dugouts are quickly across the furiously regular d
             5 | ETHIOPIA       |         0 | ven packages wake quickly. regu
             6 | FRANCE         |         3 | refully final requests. regular, ironi
             7 | GERMANY        |         3 | l platelets. regular accounts x-ray: unusual, regular acco
             8 | INDIA          |         2 | ss excuses cajole slyly across the packages. deposits print aroun
             9 | INDONESIA      |         2 |  slyly express asymptotes. regular deposits haggle slyly. carefully ironic hockey players sleep blithely. carefull
            10 | IRAN           |         4 | efully alongside of the slyly final dependencies.
            11 | IRAQ           |         4 | nic deposits boost atop the quickly final requests? quickly regula
            12 | JAPAN          |         2 | ously. final, express gifts cajole a
            13 | JORDAN         |         4 | ic deposits are blithely about the carefully regular pa
            14 | KENYA          |         0 |  pending excuses haggle furiously deposits. pending, express pinto beans wake fluffily past t
            15 | MOROCCO        |         0 | rns. blithely bold courts among the closely regular packages use furiously bold platelets?
            16 | MOZAMBIQUE     |         0 | s. ironic, unusual asymptotes wake blithely r
            17 | PERU           |         1 | platelets. blithely pending dependencies use fluffily across the even pinto beans. carefully silent accoun
            18 | CHINA          |         2 | c dependencies. furiously express notornis sleep slyly regular accounts. ideas sleep. depos
            19 | ROMANIA        |         3 | ular asymptotes are about the furious multipliers. express dependencies nag above the ironically ironic account
            20 | SAUDI ARABIA   |         4 | ts. silent requests haggle. closely express packages sleep across the blithely
            21 | VIETNAM        |         2 | hely enticingly express accounts. even, final
            22 | RUSSIA         |         3 |  requests against the platelets use never according to the quickly regular pint
            23 | UNITED KINGDOM |         3 | eans boost carefully special requests. accounts are. carefull
            24 | UNITED STATES  |         1 | y final packages. slow foxes cajole quickly. quickly silent platelets breach ironic accounts. unusual pinto be
    (25 rows)

    Query 20230720_031745_00001_v2hmn, FINISHED, 2 nodes
    Splits: 32 total, 32 done (100.00%)
    [Latency: client-side: 225ms, server-side: 192ms] [25 rows, 0B] [130 rows/s, 0B/s]

Run a Kafka example

Prepare the client configuration files.

Edit the kafka_client_jaas.conf file (for example, in the /etc/emr/kafka/conf directory; if a file with the same name already exists there, use a different directory or file name, or rename the existing file):

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useTicketCache=true
        renewTicket=true;
    };

Edit the client.properties file (for example, in the /etc/emr/kafka/conf directory; the same advice about existing files applies):

    security.protocol=SASL_PLAINTEXT
    sasl.mechanism=GSSAPI
    sasl.kerberos.service.name=kafka

Generate a ticket for the user.

    kinit -k -t /etc/krb5/keytab/user/user01.keytab user01

Create a topic.

    export KAFKA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/etc/emr/kafka/conf/kafka_client_jaas.conf" && \
      /usr/lib/emr/current/kafka/bin/kafka-topics.sh --create --bootstrap-server {broker_address}:9092 --command-config /etc/emr/kafka/conf/client.properties --topic test --replication-factor 3 --partitions 12

Note: In the example, {broker_address} is the IP address of a Kafka broker. Set it according to your environment.

List the topics.

    export KAFKA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/etc/emr/kafka/conf/kafka_client_jaas.conf" && \
      /usr/lib/emr/current/kafka/bin/kafka-topics.sh --list --bootstrap-server {broker_address}:9092 --command-config /etc/emr/kafka/conf/client.properties

Produce messages.

    export KAFKA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/etc/emr/kafka/conf/kafka_client_jaas.conf" && \
      /usr/lib/emr/current/kafka/bin/kafka-console-producer.sh --broker-list {broker_address}:9092 --topic test --producer.config /etc/emr/kafka/conf/client.properties

Consume messages. The --from-beginning option is a flag and takes no value.

    export KAFKA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/etc/emr/kafka/conf/kafka_client_jaas.conf" && \
      /usr/lib/emr/current/kafka/bin/kafka-console-consumer.sh --bootstrap-server {broker_address}:9092 --group testGroup --topic test --from-beginning --consumer.config /etc/emr/kafka/conf/client.properties
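The two client configuration files can also be written by a script. The following sketch uses a hypothetical function name, write_kafka_client_config, and writes the same contents as shown in the preparation step. In line with the advice about files that already exist, it refuses to overwrite either file.

```shell
# Hypothetical helper (an assumption): create kafka_client_jaas.conf and
# client.properties in the given directory, without overwriting existing files.
write_kafka_client_config() {
    conf_dir=$1
    mkdir -p "$conf_dir" || return 1
    for name in kafka_client_jaas.conf client.properties; do
        if [ -e "$conf_dir/$name" ]; then
            echo "refusing to overwrite $conf_dir/$name" >&2
            return 1
        fi
    done
    # JAAS entry that reuses the ticket cache populated by kinit.
    cat > "$conf_dir/kafka_client_jaas.conf" <<'EOF'
KafkaClient {
    com.sun.security.auth.module.Krb5LoginModule required
    useTicketCache=true
    renewTicket=true;
};
EOF
    # Client properties for SASL/GSSAPI over a plaintext transport.
    cat > "$conf_dir/client.properties" <<'EOF'
security.protocol=SASL_PLAINTEXT
sasl.mechanism=GSSAPI
sasl.kerberos.service.name=kafka
EOF
}

# Possible usage:
#   write_kafka_client_config /etc/emr/kafka/conf
```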

Run a Kyuubi example

Generate a ticket for the user.

    kinit -k -t /etc/krb5/keytab/user/user01.keytab user01

Connect to Kyuubi.

Enter the following command, where ${kyuubi_kinit_principal} is the value of the kyuubi.kinit.principal parameter in the Kyuubi service parameters:

    beeline -u "jdbc:hive2://${zookeeper_address}/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=kyuubi;principal=${kyuubi_kinit_principal}"

For example:

    beeline -u "jdbc:hive2://10.0.255.9:2181,10.0.255.7:2181,10.0.255.8:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=kyuubi;principal=hive/_HOST@2FDE5608A274D0A2320C.EMR.COM"

Then enter the following SQL statements:

    CREATE TABLE IF NOT EXISTS t3(i INT, s STRING);
    INSERT INTO t3 VALUES(0, 'a');
    SELECT * FROM t3;

Run a Flume example

In the /etc/emr/flume/conf directory, add a configuration file named flume_jaas.conf with the following content:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="/etc/krb5/keytab/serv/kafka.keytab"
        storeKey=true
        serviceName="kafka"
        principal="${principal}";
    };

Note: In the example, ${principal} must match the kafka.keytab file. You can obtain it with the following command:

    klist -k -t /etc/krb5/keytab/serv/kafka.keytab

In the /etc/emr/flume/conf directory, create the parameter file that Flume uses to read from Kafka, flume-kerberos-kafka.properties, with the following content:

    a1.sources = source1
    a1.sinks = k1
    a1.channels = c1

    a1.sources.source1.type = org.apache.flume.source.kafka.KafkaSource
    a1.sources.source1.channels = c1
    a1.sources.source1.kafka.consumer.group.id = flume-test-group

    a1.sinks.k1.type = hdfs
    a1.sinks.k1.hdfs.path = hdfs://emr-cluster/tmp/flume/test-data
    a1.sinks.k1.hdfs.fileType=DataStream

    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1001
    a1.channels.c1.transactionCapacity = 1000

    # Bind the source and sink to the channel
    a1.sources.source1.channels = c1
    a1.sinks.k1.channel = c1

    a1.sources.source1.kafka.bootstrap.servers = ${broker_address}:9092
    a1.sources.source1.kafka.topics = ${topic_name}
    a1.sources.source1.kafka.consumer.security.protocol=SASL_PLAINTEXT
    a1.sources.source1.kafka.consumer.sasl.mechanism=GSSAPI
    a1.sources.source1.kafka.consumer.sasl.kerberos.service.name=kafka

Note:

- Adjust the HDFS path in the example to match your environment.

- In the example, ${broker_address} is the IP address of a Kafka broker. Set it according to your environment.

- In the example, ${topic_name} is a topic created as described in the Kafka example above.

Append the following parameters to the flume-env.sh configuration file:

    export JAVA_OPTS="$JAVA_OPTS -Djava.security.krb5.conf=/etc/krb5.conf"
    export JAVA_OPTS="$JAVA_OPTS -Djava.security.auth.login.config=/etc/emr/flume/conf/flume_jaas.conf"

Start Flume.

    nohup /usr/lib/emr/current/flume/bin/flume-ng agent --name a1 --conf /etc/emr/flume/conf/ --conf-file flume-kerberos-kafka.properties > nohup.out 2>&1 &
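When the JAVA_OPTS lines are appended to flume-env.sh by a script, running the script twice would add them twice. A minimal sketch of an idempotent append follows. The function name append_once is hypothetical, and the flume-env.sh location in the usage comment is an assumption based on the Flume configuration directory used above.

```shell
# Hypothetical helper (an assumption): append a line to a file only if the
# exact same line is not already present.
append_once() {
    file=$1
    line=$2
    # -q quiet, -x whole-line match, -F fixed string (no regex interpretation).
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Possible usage (single quotes keep $JAVA_OPTS literal in the written line):
#   append_once /etc/emr/flume/conf/flume-env.sh 'export JAVA_OPTS="$JAVA_OPTS -Djava.security.krb5.conf=/etc/krb5.conf"'
#   append_once /etc/emr/flume/conf/flume-env.sh 'export JAVA_OPTS="$JAVA_OPTS -Djava.security.auth.login.config=/etc/emr/flume/conf/flume_jaas.conf"'
```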